The script worked fine, I thought. Now I realized if the movie size was modest (hundreds of frames), it is ok. But if it is thousands, now I see significant slowing down of the process, like from 1.5 sec/frame to 2~3 sec/frame (Laptop PC Let’s Note).

How can I solve this? Here is the ChatGPT’s suggestion.

スレッドプールの「適切な数」は、システムのCPUやタスクの性質に基づいています。特に、CPUバウンドのタスクでは、C++コードの最適化とスレッドプールの使い方が鍵です。

スレッド数の選定

スレッドプールの「適切な数」は、通常、使用可能なCPUコアの数に基づいています。cv2.matchTemplateがGILを解放する場合、スレッド数を多くすることも考慮されます。

タスクのためのスレッド数

CPUバウンドのタスクには、最大スレッド数をシステムの論理CPU数に合わせるのが良い。タスクの待機状態を避けるため、スレッド数をシステムに最適化している。

CPU割り当ての違いを探る

Let’s Noteは6秒、DeepStationは12秒。DeepStationのCPU配分がうまくいっていない可能性を模索中。

最適なスレッド数を見極める

cv2.matchTemplateを使用している場合、タスクの数をシステムのCPUコア数に合わせる必要がある。過剰なスレッドはパフォーマンスの悪化を招く可能性がある。

タスクの負荷を見極める

cv2.matchTemplateを使っていると、タスクの負荷が大きくなりがち。タスク数をシステムのコア数に合わせることが重要だね。

タスクの最適配分を探る

cv2.matchTemplateを使ってると、タスクをシステムのコア数に合わせるのが大事。タスク数を増やすと、空きコアがなければスレッドが溢れるかもね。

企業の物流戦略を探る

企業が物流コストを内外製品に均等に分配し、ニッチな分野の製品を特定の業者と提携して供給するアプローチを選択している。

o3-mini-high

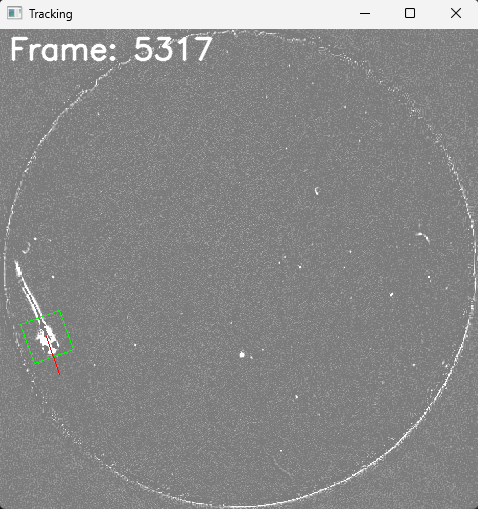

I tested the suggested change. I also commented out the image saving part. Now it seems no delay in the processing at least at 5200 frame (2.2 sec / frame, Desktop PC).

import os

os.cpu_count()

フレーム 0 処理中 2025-02-05 14:34:30.5

フレーム 1 処理中 2025-02-05 14:34:32.9

フレーム 2 処理中 2025-02-05 14:34:35.8

フレーム 3 処理中 2025-02-05 14:34:38.2

フレーム 4 処理中 2025-02-05 14:34:40.3

フレーム 5 処理中 2025-02-05 14:34:42.5

フレーム 6 処理中 2025-02-05 14:34:44.7

フレーム 7 処理中 2025-02-05 14:34:46.9

フレーム 8 処理中 2025-02-05 14:34:49.0

フレーム 9 処理中 2025-02-05 14:34:51.1

フレーム 10 処理中 2025-02-05 14:34:53.3

フレーム 5190 処理中 2025-02-05 17:48:40.8 フレーム 5191 処理中 2025-02-05 17:48:43.2 フレーム 5192 処理中 2025-02-05 17:48:45.8 フレーム 5193 処理中 2025-02-05 17:48:48.1 フレーム 5194 処理中 2025-02-05 17:48:50.3 フレーム 5195 処理中 2025-02-05 17:48:52.5 フレーム 5196 処理中 2025-02-05 17:48:54.8 フレーム 5197 処理中 2025-02-05 17:48:57.0 フレーム 5198 処理中 2025-02-05 17:48:59.2 フレーム 5199 処理中 2025-02-05 17:49:01.3 フレーム 5200 処理中 2025-02-05 17:49:03.6

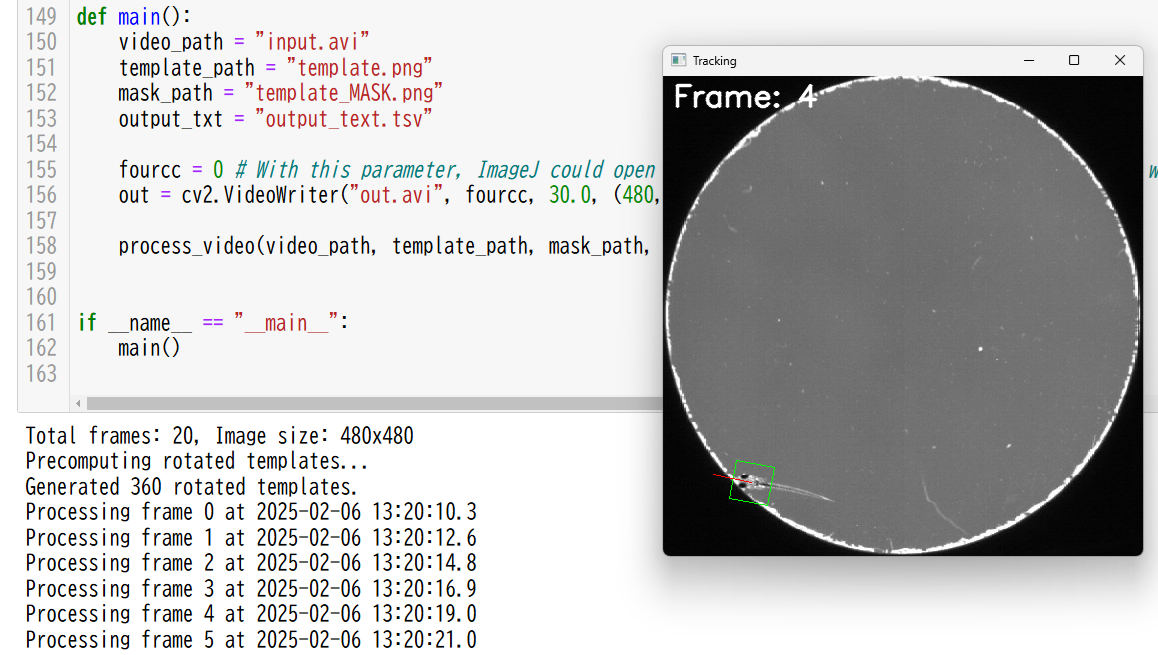

Script name: template matching (larval fish tracking) parallel ThreadPool.jpynb

The major change is that the ThreadPoolExecutor was moved in the function def process_video from the funciton def template_matching_with_rotation_parallel in the script so that we do not need call executor everytime. Not sure if this was the cause or saving the image was the cause.

def process_video(avi_path, template_path, mask_path, output_txt):

# システムの論理コア数に合わせたスレッドプールを動画全体で使い回す

with ThreadPoolExecutor(max_workers=os.cpu_count()) as executor:

Template matching (larval fish tracking) parallel ThreadPool.jpynb

# Template matching (larval fish tracking) parallel ThreadPool.jpynb # 20250206AM with ChatGPT import cv2 import numpy as np from datetime import datetime import time from concurrent.futures import ThreadPoolExecutor import os def rotate_image(image, angle): """Rotate the image by the specified angle.""" h, w = image.shape center = (w // 2, h // 2) rotation_matrix = cv2.getRotationMatrix2D(center, angle, 1.0) rotated_image = cv2.warpAffine(image, rotation_matrix, (w, h), flags=cv2.INTER_LINEAR) return rotated_image def apply_mask(template, mask): """Apply a mask to ignore the background.""" return cv2.bitwise_and(template, template, mask=mask) def preprocess_rotated_templates(template_img, mask_img, angle_step=1): """Precompute rotated templates with applied masks for 360 degrees.""" rotated_templates = {} for angle in range(0, 360, angle_step): rotated_template = rotate_image(apply_mask(template_img, mask_img), angle) rotated_mask = rotate_image(mask_img, angle) rotated_templates[angle] = (rotated_template, rotated_mask) return rotated_templates def compute_match(angle, rotated_template, rotated_mask, target_frame): """Perform template matching for a given angle.""" match_result = cv2.matchTemplate(target_frame, rotated_template, cv2.TM_CCOEFF_NORMED, mask=rotated_mask) _, max_val, _, max_loc = cv2.minMaxLoc(match_result) return angle, max_val, max_loc, match_result def template_matching_with_rotation_parallel(target_frame, rotated_templates, executor): """Perform parallel template matching using precomputed rotated templates.""" best_match = None best_angle = None max_corr = -1 result_map = None futures = [] for angle, (rotated_template, rotated_mask) in rotated_templates.items(): futures.append(executor.submit(compute_match, angle, rotated_template, rotated_mask, target_frame)) for future in futures: angle, max_val, max_loc, match_result = future.result() if max_val > max_corr: max_corr = max_val best_match = max_loc best_angle = angle result_map = match_result return best_match, best_angle, max_corr def draw_rotated_box(image, center, w, h, angle): """Draw a rotated bounding box.""" box = cv2.boxPoints(((center[0] + w // 2, center[1] + h // 2), (w, h), -angle)) box = np.intp(box) cv2.polylines(image, [box], isClosed=True, color=(0, 255, 0), thickness=1) # Compute the center of the rotated box center_x = np.mean(box[:, 0]).astype(int) center_y = np.mean(box[:, 1]).astype(int) corrected_center = (center_x, center_y) # Draw an upward direction line before rotation line_length = h # Line length angle_rad = np.radians(angle) # Convert angle to radians end_x = int(corrected_center[0] - line_length * np.sin(angle_rad)) end_y = int(corrected_center[1] - line_length * np.cos(angle_rad)) # Draw the line cv2.line(image, corrected_center, (end_x, end_y), color=(0, 0, 255), thickness=1) return image, corrected_center def process_video(video_path, template_path, mask_path, output_txt, out): cap = cv2.VideoCapture(video_path) total_frame_count = int(cap.get(cv2.CAP_PROP_FRAME_COUNT)) frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)) frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)) print(f"Total frames: {total_frame_count}, Image size: {frame_width}x{frame_height}") template_img = cv2.imread(template_path, cv2.IMREAD_GRAYSCALE) mask_img = cv2.imread(mask_path, cv2.IMREAD_GRAYSCALE) if template_img is None or mask_img is None: print("Failed to load template or mask image.") return _, mask_img = cv2.threshold(mask_img, 128, 255, cv2.THRESH_BINARY) print("Precomputing rotated templates...") rotated_templates = preprocess_rotated_templates(template_img, mask_img) print(f"Generated {len(rotated_templates)} rotated templates.") with ThreadPoolExecutor(max_workers=os.cpu_count()) as executor: frame_count = 0 with open(output_txt, "w") as f: f.write("Frame\tX\tY\tAngle\tCorrelation\n") while cap.isOpened(): formatted_time = datetime.now().strftime("%Y-%m-%d %H:%M:%S.%f")[:-5] print(f"Processing frame {frame_count} at {formatted_time}") ret, frame = cap.read() # print(f"Frame shape: {frame.shape}" if frame is not None else "Frame is None") if not ret: break # End of video frame_gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY) best_match, best_angle, max_corr = template_matching_with_rotation_parallel(frame_gray, rotated_templates, executor) x, y = best_match h, w = template_img.shape _, corrected_center = draw_rotated_box(frame, (x, y), w, h, best_angle) x_center, y_center = corrected_center f.write(f"{frame_count}\t{x_center}\t{y_center}\t{best_angle}\t{max_corr:.4f}\n") cv2.putText(frame, f"Frame: {frame_count}", (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 1, (255, 255, 255), 2, cv2.LINE_AA) out.write(frame) cv2.imshow("Tracking", frame) if cv2.waitKey(1) & 0xFF == ord('q'): # This line was necessary for imshow() to work. break frame_count += 1 cap.release() out.release() cv2.destroyAllWindows() print(f"Processing complete! Results saved to {output_txt}.") def main(): video_path = "input.avi" template_path = "template.png" mask_path = "template_MASK.png" output_txt = "output_text.tsv" fourcc = 0 # With this parameter, ImageJ could open the generated avi file. Other options did not work with ImageJ. out = cv2.VideoWriter("out.avi", fourcc, 30.0, (480, 480)) process_video(video_path, template_path, mask_path, output_txt, out) if __name__ == "__main__": main()

When I ran the above script on an Ubuntu 16 laptop PC, there was no delay in processing each frame, even with a 36,000-frame AVI file. Depending on PC’s ability (CUP clock speed and the number of cores), it takes 1.5~2 sec for each frame to be processed with my PCs.